Upskilling privacy professionals


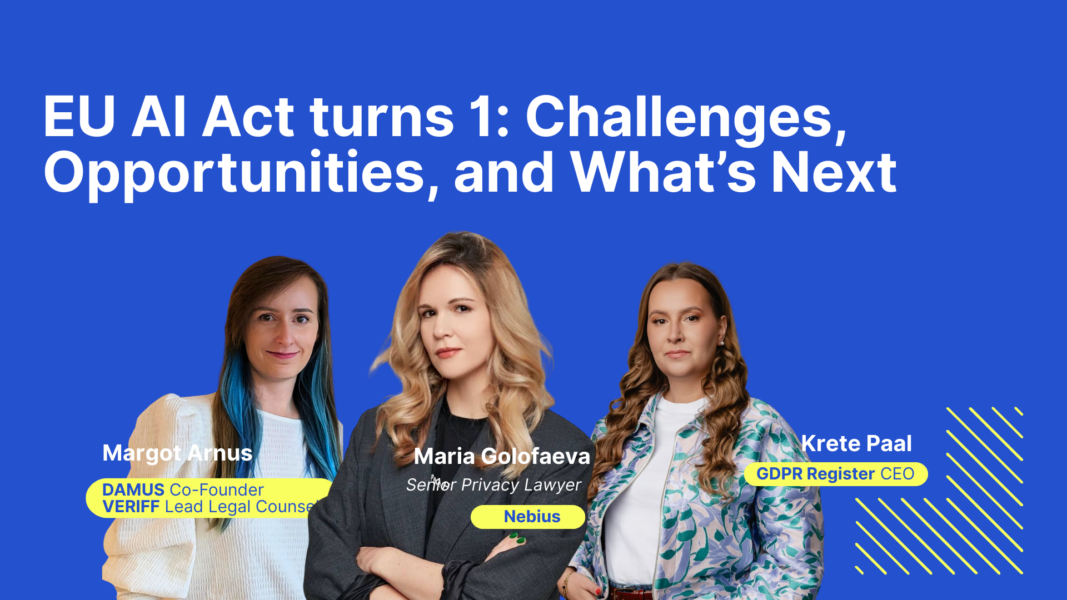

In this webinar, Krete Paal (CEO of GDPR Register) is joined by Maria Golofaeva (Nebius) and Margot Arnus (Veriff) to explore a question many organisations are now asking: is the Data Protection Officer becoming the de facto AI compliance lead? The discussion focuses on real-world implementation—how to organise AI governance, align it with GDPR compliance, and prepare for the EU AI Act without slowing down innovation.

Because AI relies heavily on data (often personal data), privacy teams are well placed to lead or co-lead AI compliance. The speakers highlight that DPOs already have experience operating in grey areas, building accountability frameworks, and balancing risk-based decision-making—skills that translate naturally into AI risk management and AI governance.

The panel shares a pragmatic approach for organisations building AI compliance capability:

A recurring theme is the tension between business goals and legal requirements. AI introduces complex systems that can be difficult to explain, which makes classic GDPR principles—transparency, purpose limitation, and data minimisation—harder to apply in practice. The panel emphasises that the aim is not to block AI, but to enable responsible use with clear guardrails.

Both guest speakers stress that AI compliance fails when legal and technical teams work in isolation. Success depends on creating a shared language and a “same room” approach: asking basic questions, mapping data flows, and agreeing on what the organisation considers “AI” in practical terms. The advice is simple and memorable: ask the ‘stupid’ questions once, early, rather than staying uncertain for months.

AI regulation can trigger internal panic (“we cannot use AI anymore”). The panel argues for the opposite: understand what is actually regulated and communicate it clearly—internally and externally. Strong governance can also become a competitive advantage, helping privacy and legal teams secure resources by framing compliance as trust, differentiation, and market readiness, not just cost.

Rather than starting from zero, the webinar recommends using GDPR documentation as a baseline and adding AI-specific sections. GDPR already demands clarity, documentation, and understandable transparency—making it a strong template for AI compliance. Practical examples include:

To keep track of AI tools (including hidden AI features), the panel recommends integrating AI questions into supplier assessments and ongoing monitoring. Key checks include:

For generative AI and LLMs, the same tool can support many use cases—so organisations need both vendor controls and internal tracking of how teams actually use the tool.
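The minimal Python sketch below pairs vendor controls with internal tracking in a simple AI tool inventory. It assumes a hypothetical schema: the `AITool` and `UseCase` records and their fields are illustrative, not something the panel prescribed. Each tool carries its supplier-assessment result, and each team's actual use is logged against it. The inventory then flags for review any use of an unassessed tool, and otherwise only the uses that involve personal data.

```python
from dataclasses import dataclass, field

# Hypothetical inventory schema: field names are illustrative assumptions,
# not a format prescribed by the panel or by any regulation.


@dataclass
class UseCase:
    team: str
    purpose: str
    personal_data: bool  # does this use involve personal data?


@dataclass
class AITool:
    name: str
    vendor: str
    vendor_assessed: bool  # passed a supplier assessment that includes AI questions
    use_cases: list[UseCase] = field(default_factory=list)

    def register_use(self, team: str, purpose: str, personal_data: bool) -> None:
        """Record how a team actually uses the tool (internal tracking)."""
        self.use_cases.append(UseCase(team, purpose, personal_data))

    def needs_review(self) -> list[UseCase]:
        """All uses of an unassessed tool; otherwise only uses touching personal data."""
        if not self.vendor_assessed:
            return list(self.use_cases)
        return [u for u in self.use_cases if u.personal_data]


# Example: one general-purpose LLM, two very different internal uses.
llm = AITool(name="ExampleLLM", vendor="ExampleVendor", vendor_assessed=True)
llm.register_use("Marketing", "draft campaign copy", personal_data=False)
llm.register_use("Support", "summarise customer tickets", personal_data=True)

for use in llm.needs_review():
    print(f"{use.team}: {use.purpose}")  # prints "Support: summarise customer tickets"
```

Keeping use cases as separate records lets a single general-purpose LLM appear once in the vendor register but several times in the internal review queue.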

The speakers discuss real incidents (such as bias in recruitment tooling and misclassification in image systems) to underline why AI bias is not only unethical but may also be unlawful. They note that the EU AI Act offers clearer signals on what is prohibited, but organisations still need internal principles, stakeholder buy-in, and processes for bias mitigation—often in tension with data minimisation.

AI governance goes beyond personal data. The panel recommends clear internal policies that classify:

Practical mitigations include restricting sensitive data in AI tools, using filters, and considering synthetic data or de-identification strategies to reduce exposure.
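The minimal Python sketch below illustrates "using filters" combined with pseudonymisation. It masks two easy-to-detect identifier types before a prompt is sent to an external AI tool. Email addresses are replaced with a keyed-hash token, so the same address maps to the same token across prompts, and phone numbers are removed. The regular expressions, the token format and the placeholder key are assumptions for illustration only. Pseudonymised data generally remains personal data under GDPR, so a filter like this reduces exposure rather than taking the data out of scope.

```python
import hashlib
import hmac
import re

# Illustrative patterns only: they catch common email and phone formats
# (and may over-match, e.g. some dates), but not names, addresses or other
# free-text identifiers.
PII_RE = re.compile(
    r"(?P<email>[\w.+-]+@[\w-]+(?:\.[\w-]+)+)"
    r"|(?P<phone>\+?\d[\d\s-]{7,}\d)"
)


def pseudonymise(value: str, key: bytes) -> str:
    """Keyed hash: the same input and key always give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<ID:{digest[:10]}>"


def filter_prompt(text: str, key: bytes) -> str:
    """Pseudonymise emails and drop phone numbers before text leaves for an AI tool."""

    def replace(match: re.Match) -> str:
        if match.group("email"):
            return pseudonymise(match.group("email"), key)
        return "<PHONE>"

    return PII_RE.sub(replace, text)


# The key is a placeholder; in practice it would be stored separately
# from the pseudonymised output.
print(filter_prompt("Contact anna.tamm@example.com or +372 5555 1234.", b"demo-key"))
```

A single combined pattern is scanned left to right, so an email containing a long digit run is consumed as an email before the phone pattern can match inside it.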

The webinar recognises that AI can support privacy teams, provided human oversight remains in place. Examples include drafting policies, brainstorming, and accelerating inputs to the Record of Processing Activities (RoPA) by generating structured descriptions of business processes (purpose, data categories, vendors) that colleagues can review and refine.
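A RoPA draft produced with AI assistance is easier to review if it arrives in a fixed structure. The minimal Python sketch below assumes the assistant has been asked to return JSON with four hypothetical keys (process, purpose, data categories, vendors). The code rejects incomplete drafts and marks accepted ones as pending human review rather than approved. A complete record under GDPR Article 30 needs more than these fields, for example categories of data subjects, retention periods and security measures.

```python
import json
from dataclasses import dataclass

# Hypothetical minimal field set; a full Article 30 record needs more.
REQUIRED_FIELDS = ("process", "purpose", "data_categories", "vendors")


@dataclass
class RopaDraft:
    process: str
    purpose: str
    data_categories: list[str]
    vendors: list[str]
    status: str = "pending_human_review"  # drafts are never auto-approved


def parse_ai_draft(raw: str) -> RopaDraft:
    """Turn an AI-generated JSON draft into a record a colleague can review.

    Raises ValueError if fields are missing or empty, so gaps are surfaced
    instead of silently accepted.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("draft must be a JSON object")
    missing = [name for name in REQUIRED_FIELDS if not data.get(name)]
    if missing:
        raise ValueError(f"draft incomplete, missing: {', '.join(missing)}")
    return RopaDraft(
        process=data["process"],
        purpose=data["purpose"],
        data_categories=list(data["data_categories"]),
        vendors=list(data["vendors"]),
    )


raw = (
    '{"process": "Customer support ticketing", '
    '"purpose": "Resolve customer enquiries", '
    '"data_categories": ["name", "email", "ticket content"], '
    '"vendors": ["ExampleHelpdesk"]}'
)
draft = parse_ai_draft(raw)
print(draft.status)  # prints "pending_human_review"
```

Failing loudly on empty fields keeps the reviewer's attention on what the assistant could not fill in, rather than on text that merely looks complete.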

The panel’s conclusion is nuanced: DPOs are often well positioned, but whether they should lead AI compliance depends on company size, resources, and the scale of AI use. AI compliance is best treated as a team effort, with privacy acting as a key driver of ongoing monitoring, accountability, and practical governance.

Be cautious, but curious. AI is moving fast, regulation is evolving, and organisations that keep learning, keep documenting, and keep cross-functional conversations active will be best placed to use AI responsibly—and competitively.

Krete Paal is the CEO of GDPR Register and has extensive experience in privacy management and regulatory compliance. She works closely with organisations across sectors to operationalise GDPR requirements and translate complex regulatory obligations into practical, scalable processes—now increasingly in the context of AI governance.

Maria Golofaeva is a Data Protection Officer at Toloka, where she advises on privacy and data protection matters in data-intensive and AI-driven environments. Her work focuses on navigating GDPR compliance challenges in advanced data processing operations, including issues related to AI training, data quality, and risk mitigation.

Margot Arnus is Senior Privacy & Product Legal Counsel at Veriff, advising product and engineering teams on privacy-by-design, AI-enabled product development, and regulatory compliance. She brings hands-on experience in aligning fast-moving product innovation with GDPR, ethical considerations, and emerging AI regulation.